Revisiting AlexNet with Modern Optimization

Achieving 95.7% test accuracy on CIFAR-10 by updating a classic CNN architecture.

By Metanthropic, Ekjot Singh

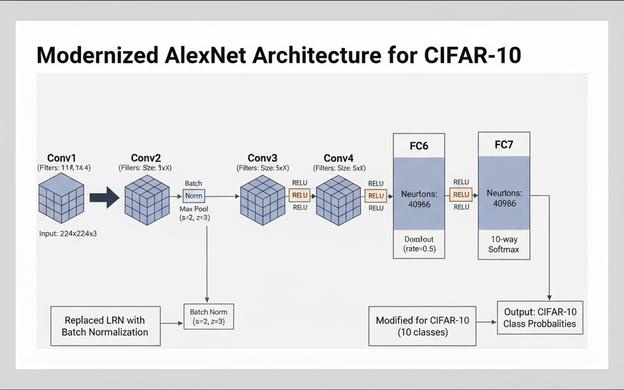

This project revisits AlexNet (Krizhevsky et al., 2012), a foundational deep convolutional neural network, and adapts it to the CIFAR-10 dataset of 32×32 color images in 10 classes. The primary goal was to explore how this pioneering architecture performs when combined with optimization and regularization techniques developed over the past decade.

By integrating contemporary best practices, we show that the original design remains relevant and effective. The modernized network, which retains AlexNet's core layout of five convolutional and three fully-connected layers, achieved 95.7% accuracy on the CIFAR-10 test set.
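Because CIFAR-10 images are only 32×32 pixels, the layer dimensions have to shrink relative to the ImageNet-scale original while the five-convolution, three-fully-connected layout stays the same. The sketch below traces tensor shapes and parameter counts through such a stack using the standard output-size formula ⌊(n + 2p − k)/s⌋ + 1. Every channel count, kernel size and FC width in `CONV_LAYERS` and `FC_WIDTHS` is a hypothetical choice for illustration, not the configuration used in this project, and BatchNorm parameters are ignored.

```python
# Hypothetical layer configuration: the channel counts, kernel sizes and
# FC widths below are illustrative assumptions, not the project's settings.
CONV_LAYERS = [
    # (out_channels, kernel, stride, padding, followed_by_2x2_maxpool)
    (64, 3, 1, 1, True),
    (192, 3, 1, 1, True),
    (384, 3, 1, 1, False),
    (256, 3, 1, 1, False),
    (256, 3, 1, 1, True),
]
FC_WIDTHS = [1024, 512, 10]  # last layer = 10 CIFAR-10 classes


def out_size(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_alexnet_like(height=32, width=32, channels=3):
    """Walk the 5-conv / 3-FC stack, returning per-layer output shapes and the
    total number of weights plus biases (BatchNorm parameters are ignored)."""
    shapes, params = [], 0
    for out_c, k, s, p, pool in CONV_LAYERS:
        params += k * k * channels * out_c + out_c  # conv weights + biases
        height, width, channels = out_size(height, k, s, p), out_size(width, k, s, p), out_c
        if pool:  # 2x2 max pooling with stride 2 halves each spatial side
            height, width = out_size(height, 2, 2, 0), out_size(width, 2, 2, 0)
        shapes.append((channels, height, width))
    in_features = channels * height * width  # flattened input to the first FC layer
    for units in FC_WIDTHS:
        params += in_features * units + units  # dense weights + biases
        shapes.append((units,))
        in_features = units
    return shapes, params


if __name__ == "__main__":
    layer_shapes, n_params = trace_alexnet_like()
    for shape in layer_shapes:
        print(shape)
    print("parameters:", n_params)
```

With these assumed settings, the last convolutional block produces a 256×4×4 feature map, which flattens to 4,096 inputs for the first fully-connected layer; most of the parameters then sit in that first dense layer, which is why dropout is usually applied there.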

Key Modernizations

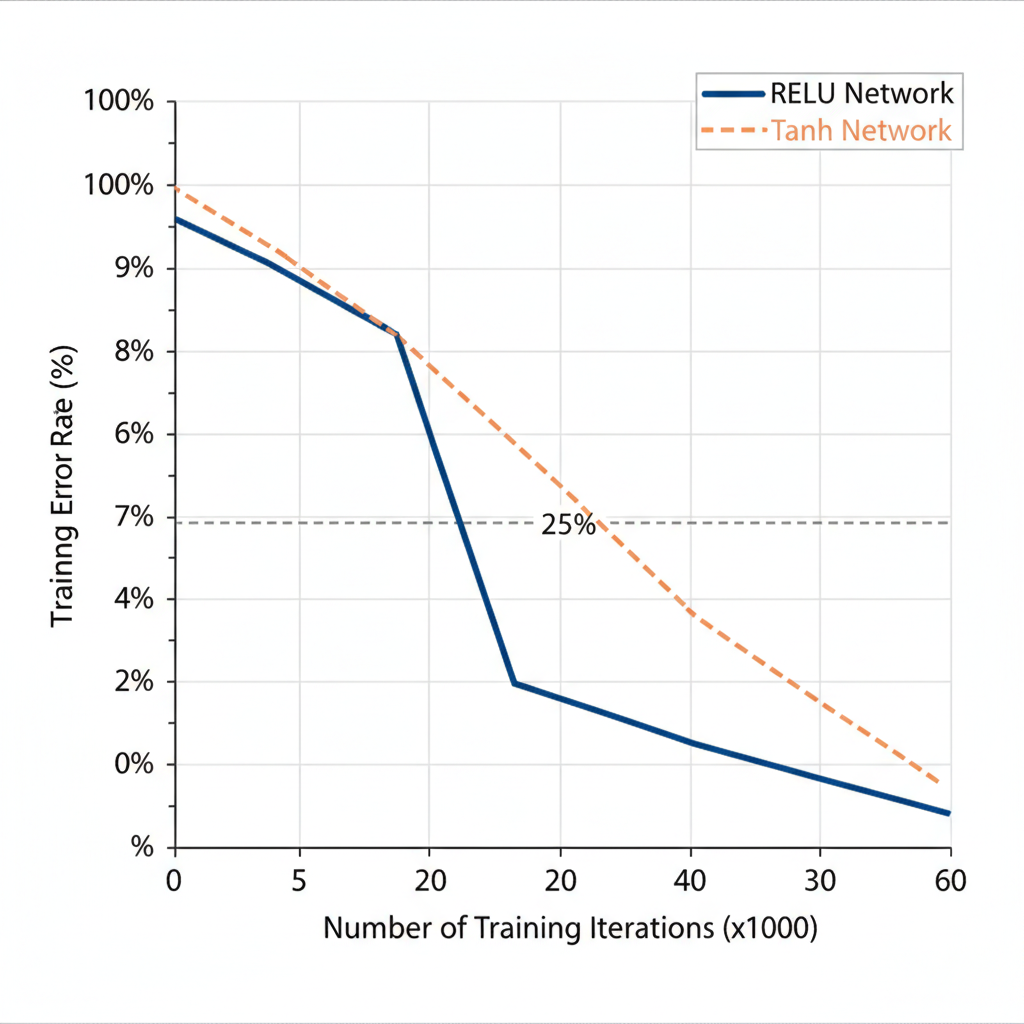

Several upgrades were instrumental in achieving high accuracy and stable training. We replaced the original Local Response Normalization with Batch Normalization to accelerate training and improve generalization. The original training rule, stochastic gradient descent with momentum, was replaced by the Adam optimizer, which adapts the step size of each parameter individually.
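Both substitutions can be illustrated on scalars. Batch Normalization standardizes each feature with the mean and variance of the current mini-batch and then applies a learnable scale γ and shift β; Adam keeps exponential moving averages of the gradient and of its square, corrects their initialization bias, and divides each step by the square root of the second moment. This is a minimal pure-Python sketch, not the framework code used in the project: it shows only training-mode BatchNorm (at inference, frameworks use running statistics instead), the Adam defaults are the commonly cited values from the Adam paper rather than this project's hyperparameters, and the toy objective (θ − 3)² in the hypothetical `minimize_toy` helper is purely illustrative.

```python
import math


def batch_norm(xs, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode BatchNorm for one feature across a mini-batch."""
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)  # biased batch variance
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in xs]


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad         # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad * grad  # second-moment estimate
    m_hat = m / (1 - beta1 ** t)               # bias corrections for the
    v_hat = v / (1 - beta2 ** t)               # zero-initialized averages
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


def minimize_toy(steps=1000, lr=0.1):
    """Hypothetical toy problem: minimize f(theta) = (theta - 3)^2 with Adam."""
    theta, m, v = 0.0, 0.0, 0.0
    for t in range(1, steps + 1):
        grad = 2.0 * (theta - 3.0)  # derivative of (theta - 3)^2
        theta, m, v = adam_step(theta, grad, m, v, t, lr=lr)
    return theta


if __name__ == "__main__":
    print(batch_norm([1.0, 2.0, 3.0, 4.0]))
    print(minimize_toy())
```

`batch_norm` returns values with zero mean and (up to the small `eps`) unit variance, and `minimize_toy()` drives θ toward 3. Note that the first Adam step has magnitude close to `lr` regardless of the gradient's scale, which is the per-parameter adaptivity mentioned above.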

To counter the substantial risk of overfitting on CIFAR-10, which is far smaller than the ImageNet data AlexNet was originally trained on (50,000 training images versus roughly 1.2 million), we combined several regularization techniques. On-the-fly data augmentation (random flips, rotations, and zooms) artificially enlarged the training set, while dropout was retained in the fully-connected layers to prevent complex co-adaptations between neurons.
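Two of these regularizers can be sketched in a few lines. A random horizontal flip mirrors an image left to right, yielding a plausible new training example without storing extra data; dropout zeroes each hidden unit with some probability during training. This sketch assumes single-channel images stored as nested Python lists and uses inverted dropout, the common modern variant that rescales surviving activations during training (the original AlexNet instead multiplied outputs by 0.5 at test time). Rotations and zooms are omitted because they require interpolation, and the function names and 0.5 rates are illustrative assumptions, not taken from the project.

```python
import random


def random_hflip(image, rng, p=0.5):
    """Mirror an H x W image (list of rows) left-to-right with probability p."""
    if rng.random() < p:
        return [row[::-1] for row in image]
    return [row[:] for row in image]  # copy so callers never share rows


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` and scale the
    survivors by 1 / (1 - rate) so the expected activation is unchanged."""
    if not training or rate == 0.0:
        return list(activations)  # identity at inference time
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed for repeatable sampling
    image = [[1, 2, 3], [4, 5, 6]]
    print(random_hflip(image, rng, p=1.0))  # [[3, 2, 1], [6, 5, 4]]
    print(dropout([1.0] * 8, rate=0.5, rng=rng))
```

Because a fresh random draw is made for every image and every forward pass, the network rarely sees exactly the same input or the same set of active neurons twice, which is what makes both techniques effective against overfitting.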

To ensure reproducibility and facilitate further research, the complete implementation and final trained model are publicly available.

- 📄 Paper (PDF): Read the full paper on GitHub

- 💻 Code Repository: View the source code on GitHub

- 📓 Kaggle Notebook: View the full training process on Kaggle.

- 🤖 Hugging Face Model: The final trained model is hosted on the Hugging Face Hub.

References

- Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems, 25.

- Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., & Fei-Fei, L. (2009). ImageNet: A large-scale hierarchical image database. 2009 IEEE Conference on Computer Vision and Pattern Recognition.

- Krizhevsky, A. (2009). Learning multiple layers of features from tiny images. Master's thesis, University of Toronto.

- Hinton, G. E., Srivastava, N., Krizhevsky, A., Sutskever, I., & Salakhutdinov, R. R. (2012). Improving neural networks by preventing co-adaptation of feature detectors. arXiv preprint arXiv:1207.0580.

- Nair, V., & Hinton, G. E. (2010). Rectified linear units improve restricted Boltzmann machines. Proceedings of the 27th International Conference on Machine Learning (ICML-10).

- LeCun, Y., Huang, F. J., & Bottou, L. (2004). Learning methods for generic object recognition with invariance to pose and lighting. 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition.